Rocking the AI World

Fueling the AI Revolution, Revolutionizing the AI Sphere

Social media is flooded with AI agents: systems that can drive innovation and that are capturing the world's attention.

AI mimics human senses through technologies like cameras (vision) and microphones (hearing), enabling machines to perceive the world. Beyond individual senses, its multimodal capabilities (integrating vision and language, for instance) enhance fields like healthcare and robotics. However, AI falls short of human consciousness: it lacks the self-awareness and ethical depth of the human mind. These limitations raise broader concerns about unchecked AI development, from algorithmic bias to existential risks.

Let us explore the basics to understand how AI's multimodal capabilities can complement some of our human capabilities.

Text-to-Image: Generates images from textual descriptions using models like diffusion models, GANs, or transformer-based architectures.

Image-to-Image: Transforms an input image into a modified output, such as a style-transferred, inpainted, or outpainted image, often using conditional GANs or diffusion models.

Text-to-Audio: Converts text into natural-sounding audio, typically through text-to-speech (TTS) systems that combine acoustic modeling with vocoding techniques.

Audio-to-Text: Transcribes spoken language into written text using automatic speech recognition (ASR) systems, which may use CTC-based models or transformer architectures.

Text-to-Video: Generates video sequences from textual prompts by synthesizing temporally coherent frames, often using diffusion models, GANs, or autoregressive methods.

Several paradigms have emerged for generating images from noise or from textual descriptions. The most common are text-to-image models, generative adversarial networks (GANs), and diffusion models, each described below.

Note: These AI-generated variations are crafted by AI models with adherence to evolving copyright laws and ethical guidelines, ensuring responsible use of advanced models.

Text-to-image models (like DALL·E or Stable Diffusion) take a natural language description and generate an image that reflects that prompt. These systems typically use a combination of a text encoder (such as CLIP) and a generative model.

Below is Python code that uses the Hugging Face diffusers library to demonstrate text-to-image generation with Stable Diffusion.

# Install necessary libraries (if not already installed)

# !pip install diffusers transformers torch

from diffusers import StableDiffusionPipeline

import torch

# Load the pre-trained Stable Diffusion model

model_id = "CompVis/stable-diffusion-v1-4"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda") # Use GPU if available for faster inference

# Define a text prompt

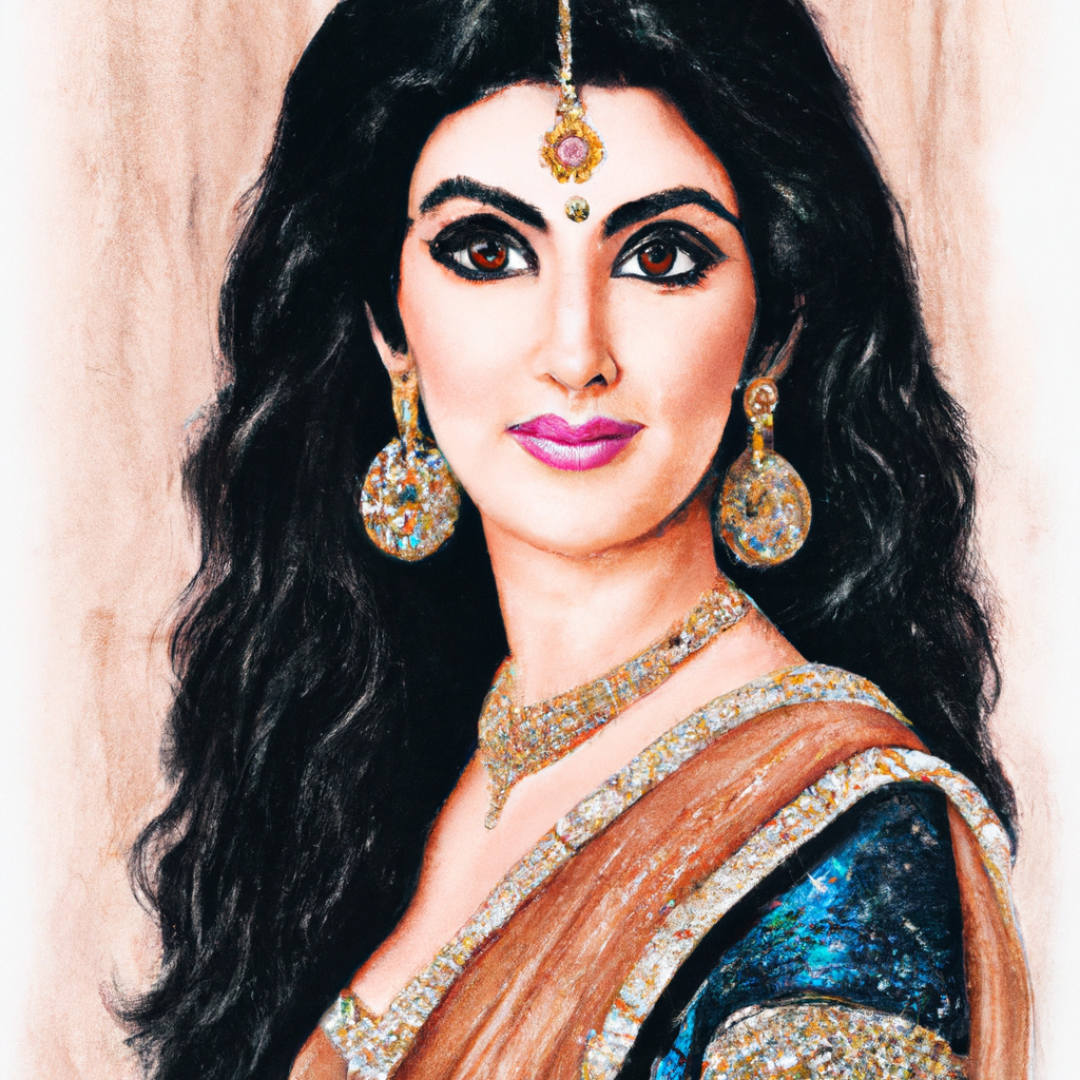

prompt = "A realistic photo of Bollywood actor Raj Kapoor showman in the Movie Mera Naam Joker"

# Generate an image based on the prompt

result = pipe(prompt)

image = result.images[0]

# Save or display the image

image.save("ai_generated_image.png")

image.show()

GANs consist of a generator and a discriminator that are trained in a minimax game. The generator maps a random noise vector from a latent space to an image, while the discriminator distinguishes between real and generated images.
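The minimax game is usually written as the value function from the original GAN paper (Goodfellow et al., 2014), where $D(x)$ is the discriminator's estimated probability that $x$ is real and $z$ is drawn from a noise prior $p_z$, typically a standard Gaussian:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator is trained to maximize $V$ and the generator to minimize it. In practice, implementations commonly train the generator to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training when the discriminator easily rejects fakes.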

Below is a simplified PyTorch example that trains a GAN to generate MNIST digits:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
import matplotlib.pyplot as plt

# Hyperparameters
latent_dim = 100
batch_size = 64
epochs = 20
lr = 0.0002

# Data loader for MNIST (pixels normalized to [-1, 1] to match the generator's Tanh)
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5,), (0.5,))
])
mnist = datasets.MNIST(root='./data', train=True, download=True, transform=transform)
dataloader = torch.utils.data.DataLoader(mnist, batch_size=batch_size, shuffle=True)

# Generator network: latent vector -> 28x28 image
class Generator(nn.Module):
    def __init__(self, latent_dim):
        super(Generator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(latent_dim, 128),
            nn.ReLU(inplace=True),
            nn.Linear(128, 784),
            nn.Tanh()  # Outputs in range [-1, 1]
        )

    def forward(self, z):
        img = self.model(z)
        img = img.view(img.size(0), 1, 28, 28)
        return img

# Discriminator network: 28x28 image -> probability that it is real
class Discriminator(nn.Module):
    def __init__(self):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(784, 128),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Linear(128, 1),
            nn.Sigmoid()  # Probability output
        )

    def forward(self, img):
        img_flat = img.view(img.size(0), -1)
        validity = self.model(img_flat)
        return validity

# Initialize networks
generator = Generator(latent_dim)
discriminator = Discriminator()

# Loss and optimizers
adversarial_loss = nn.BCELoss()
optimizer_G = optim.Adam(generator.parameters(), lr=lr)
optimizer_D = optim.Adam(discriminator.parameters(), lr=lr)

# Training loop
for epoch in range(epochs):
    for i, (imgs, _) in enumerate(dataloader):
        batch_size_current = imgs.size(0)

        # Real and fake labels
        real = torch.ones(batch_size_current, 1)
        fake = torch.zeros(batch_size_current, 1)

        # Train Discriminator
        optimizer_D.zero_grad()
        real_pred = discriminator(imgs)
        loss_real = adversarial_loss(real_pred, real)
        z = torch.randn(batch_size_current, latent_dim)
        gen_imgs = generator(z)
        fake_pred = discriminator(gen_imgs.detach())  # detach: don't update G here
        loss_fake = adversarial_loss(fake_pred, fake)
        loss_D = (loss_real + loss_fake) / 2
        loss_D.backward()
        optimizer_D.step()

        # Train Generator
        optimizer_G.zero_grad()
        gen_pred = discriminator(gen_imgs)
        loss_G = adversarial_loss(gen_pred, real)  # G wants D to label fakes as real
        loss_G.backward()
        optimizer_G.step()

        if i % 200 == 0:
            print(f"Epoch [{epoch+1}/{epochs}] Batch {i}/{len(dataloader)} Loss D: {loss_D.item():.4f}, Loss G: {loss_G.item():.4f}")

# Visualize generated images
z = torch.randn(16, latent_dim)
gen_imgs = generator(z).detach().numpy()
gen_imgs = (gen_imgs + 1) / 2  # Rescale from [-1, 1] to [0, 1]
fig, axes = plt.subplots(2, 8, figsize=(12, 3))
for i, ax in enumerate(axes.flatten()):
    ax.imshow(gen_imgs[i][0], cmap="gray")
    ax.axis("off")
plt.show()
```
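To see how the `BCELoss` calls in the training loop relate to the minimax objective, the sketch below evaluates the same losses by hand for single probabilities using only the Python standard library. The probabilities are made-up illustrative values and the function names are hypothetical. Note that `loss_G` compares D(G(z)) against the *real* label, which amounts to the non-saturating generator loss, -log D(G(z)), rather than the log(1 - D(G(z))) term of the original minimax formulation.

```python
import math

def bce(p, target):
    """Binary cross-entropy for one probability p in (0, 1), as nn.BCELoss computes per element."""
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(d_real, d_fake):
    """Mirrors loss_D: the average of BCE(D(x), 1) and BCE(D(G(z)), 0)."""
    return (bce(d_real, 1.0) + bce(d_fake, 0.0)) / 2

def generator_loss(d_fake):
    """Mirrors loss_G: BCE(D(G(z)), 1), which simplifies to -log D(G(z))."""
    return bce(d_fake, 1.0)

# Illustrative values: early in training, D confidently rejects fakes.
d_real, d_fake = 0.9, 0.01
print(f"loss_D = {discriminator_loss(d_real, d_fake):.4f}")
print(f"loss_G = {generator_loss(d_fake):.4f}")

# Gradient magnitude w.r.t. the discriminator's pre-sigmoid logit a, where D = sigmoid(a):
#   non-saturating  -log(sigmoid(a))     -> |d/da| = 1 - D(G(z))
#   saturating       log(1 - sigmoid(a)) -> |d/da| = D(G(z))
grad_non_saturating = 1 - d_fake
grad_saturating = d_fake
print(f"non-saturating: {grad_non_saturating:.2f}, saturating: {grad_saturating:.2f}")
```

When D(G(z)) is close to 0, the saturating objective gives the generator almost no gradient, while the non-saturating one stays close to 1, which is why the training loop uses the real label for `loss_G`.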


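Diffusion models generate images by starting with pure noise and iteratively refining it. The process involves two phases: a forward process that gradually adds Gaussian noise to a training image until it is indistinguishable from pure noise, and a reverse process, learned by a neural network, that removes the noise step by step to produce an image.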

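Below is a minimal NumPy sketch of the diffusion process. It uses the linear noise schedule from the DDPM paper, and `model` stands for any trained network that predicts the noise contained in its input:

```python
import numpy as np

# x_0 is a real image (NumPy array); T is the total number of diffusion steps.
# Linear schedule from the DDPM paper: beta_t rises from 1e-4 to 0.02.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas             # alpha_t: fraction of signal variance kept at step t
alpha_bars = np.cumprod(alphas)  # alpha_bar_t = alpha_1 * ... * alpha_t

# Forward process: add Gaussian noise incrementally
def forward_diffusion(x_0, rng=None):
    rng = np.random.default_rng() if rng is None else rng
    x_t = x_0
    for t in range(T):
        noise = rng.standard_normal(x_t.shape)
        x_t = np.sqrt(alphas[t]) * x_t + np.sqrt(1 - alphas[t]) * noise
    return x_t

# Reverse process: denoise step by step (DDPM ancestral sampling).
# model(x_t, t) is a trained network that predicts the total noise in x_t.
def reverse_diffusion(x_T, model, rng=None):
    rng = np.random.default_rng() if rng is None else rng
    x_t = x_T
    for t in reversed(range(T)):
        predicted_noise = model(x_t, t)
        mean = (x_t - betas[t] / np.sqrt(1 - alpha_bars[t]) * predicted_noise) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise on every step except the last (variance beta_t)
            mean = mean + np.sqrt(betas[t]) * rng.standard_normal(x_t.shape)
        x_t = mean
    return x_t
```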

In practice, the parameters (such as alpha_t) are carefully scheduled, and robust libraries like Hugging Face's diffusers provide production-ready implementations.
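Because each forward step adds independent Gaussian noise, the whole forward process collapses into one closed-form step: q(x_t | x_0) is Gaussian with mean sqrt(alpha_bar_t) * x_0 and variance 1 - alpha_bar_t, where alpha_bar_t is the product of all alphas up to step t. This is what lets training jump to a random timestep directly instead of looping. The standard-library sketch below checks this on scalar values, assuming the same linear DDPM schedule as above; the function names are hypothetical and t is a 0-based step index.

```python
import math
import random

def linear_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step alphas and cumulative alpha_bars for a linear beta schedule."""
    betas = [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)
    return alphas, alpha_bars

def iterative_moments(x0, alphas, t):
    """Mean and variance of x_t after applying the single-step update for steps 0..t."""
    mean, var = x0, 0.0
    for a in alphas[: t + 1]:
        mean = math.sqrt(a) * mean  # x <- sqrt(a) * x + sqrt(1 - a) * noise
        var = a * var + (1.0 - a)   # independent unit-variance noise adds (1 - a)
    return mean, var

def closed_form_moments(x0, alpha_bars, t):
    """Mean and variance of q(x_t | x_0) = N(sqrt(alpha_bar_t) * x_0, 1 - alpha_bar_t)."""
    return math.sqrt(alpha_bars[t]) * x0, 1.0 - alpha_bars[t]

def q_sample(x0, alpha_bars, t, rng=None):
    """Jump straight to step t with a single Gaussian draw; returns (x_t, noise)."""
    rng = random.Random() if rng is None else rng
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(alpha_bars[t]) * x0 + math.sqrt(1.0 - alpha_bars[t]) * eps, eps

alphas, alpha_bars = linear_schedule()
print(iterative_moments(0.7, alphas, 499))
print(closed_form_moments(0.7, alpha_bars, 499))
```

Both calls report the same mean and variance, and by the final step alpha_bar is tiny, so x_T is essentially pure noise regardless of x_0.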

Imagen3 is Google's text-to-image diffusion model for photorealistic image synthesis. By combining a transformer-based text encoder with a diffusion process, Imagen3 translates natural language prompts into high-quality, semantically rich images. Large-scale training on high-quality image-text pairs enables it to generate images with fine detail and creative variety.

Imagen3 builds on the original Imagen model, described on the Imagen research page and in the paper "Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding" (Saharia et al., 2022). That work found that scaling the frozen, text-only language model (T5) used as the text encoder improves image fidelity and image-text alignment more than scaling the image diffusion model. Imagen3 refines both the architectural design and the training methodology, improving semantic understanding and visual fidelity and making it one of the leading text-to-image models.

This section demonstrates how to use Google Cloud Platform's Imagen3 model for generating images from text prompts. The Colab notebook provides a step-by-step guide to installing the necessary libraries, authenticating with your API key, and generating high-quality images using Google's latest generative AI technology.

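```python
# Install the Google Gen AI SDK and Pillow (if not already installed)
!pip install google-genai pillow

from io import BytesIO

from google import genai
from google.genai import types
from PIL import Image

# Create a client authenticated with your API key
client = genai.Client(api_key="YOUR_API_KEY")

# Define your text prompt
prompt = "A futuristic cityscape at dusk with vibrant neon lights"

# Generate an image using Imagen3. The model ID is an assumption:
# Google versions and retires these identifiers, so check the current model list.
response = client.models.generate_images(
    model="imagen-3.0-generate-002",
    prompt=prompt,
    config=types.GenerateImagesConfig(number_of_images=1),
)

# Decode the returned image bytes and display the image
image = Image.open(BytesIO(response.generated_images[0].image.image_bytes))
image.show()
```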

For a complete walkthrough, check out the Imagen3 Image Generation Colab Notebook.

Image-to-image translation transforms an input image into an output image altered according to a desired transformation or style. Common techniques include conditional GANs and diffusion models, applied to tasks such as style transfer, inpainting, and outpainting.

The code below demonstrates how to use the Stable Diffusion Img2Img pipeline from the diffusers library to transform an input image. The process takes an input image and a text prompt to generate a new image that incorporates the characteristics of the prompt while preserving aspects of the original image.

```python
from diffusers import StableDiffusionImg2ImgPipeline
import torch
from PIL import Image

def generate_img2img(prompt, input_image_path, output_image_path, strength=0.75, num_inference_steps=50):
    """
    Generate an image from an input image using Stable Diffusion's Img2Img pipeline.

    Args:
        prompt (str): The textual prompt to guide image transformation.
        input_image_path (str): Path to the input image.
        output_image_path (str): Path to save the generated output image.
        strength (float): Degree of transformation (0.0 retains the input image, 1.0 fully transforms it).
        num_inference_steps (int): Number of denoising steps for generation.
    """
    # Load the Img2Img pipeline with Stable Diffusion v1.5 weights
    # (mirror of the former runwayml/stable-diffusion-v1-5 repository on Hugging Face)
    pipe = StableDiffusionImg2ImgPipeline.from_pretrained(
        "stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16
    )
    pipe = pipe.to("cuda")  # float16 weights require a GPU

    # Open the input image
    init_image = Image.open(input_image_path).convert("RGB")

    # Generate the output image based on the prompt and input image
    # (current diffusers releases take the input image as `image`, not `init_image`)
    result = pipe(
        prompt=prompt,
        image=init_image,
        strength=strength,
        num_inference_steps=num_inference_steps,
    )

    # The pipeline returns a list of generated images
    result_image = result.images[0]
    result_image.save(output_image_path)
    print(f"Output image saved to {output_image_path}")


if __name__ == "__main__":
    prompt = "A vibrant, futuristic cityscape with neon lights"
    input_image_path = "input_image.png"  # Replace with your input image file
    output_image_path = "output_image.png"  # The output will be saved here
    generate_img2img(prompt, input_image_path, output_image_path)
```

In this code, the generate_img2img function loads an input image, applies the transformation as guided by the text prompt, and saves the output image. Adjust the strength parameter to control the extent of the transformation.
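Under the hood, `strength` decides how much of the denoising schedule is actually run: the input image is noised to an intermediate timestep, and only the remaining steps are denoised. The sketch below mirrors that arithmetic as implemented in diffusers' img2img pipelines, assuming a scheduler that takes one model evaluation per step; the function name is hypothetical.

```python
def img2img_steps(num_inference_steps, strength):
    """Return (skipped_steps, denoising_steps_run) for an img2img call.

    Assumption: a first-order scheduler (one model call per step).
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    # How many steps' worth of noise is added to the encoded input image
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    # Denoising starts this many steps into the full schedule
    t_start = max(num_inference_steps - init_timestep, 0)
    return t_start, num_inference_steps - t_start

for s in (0.25, 0.5, 0.75, 1.0):
    skipped, run = img2img_steps(50, s)
    print(f"strength={s}: skip {skipped} steps, run {run} denoising steps")
```

With the defaults in `generate_img2img` (`strength=0.75`, `num_inference_steps=50`), only 37 of the 50 steps are run, which is also why lower strength values finish faster and stay closer to the input.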

When working with image-to-image translation, especially in production or public-facing applications, it's important to incorporate guardrails that keep generated content safe and appropriate.

---

Text-to-audio generation—most commonly applied to text-to-speech (TTS)—is the process of converting textual input into a natural-sounding audio waveform. The field has evolved from rule-based and concatenative methods to sophisticated deep learning approaches.

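Modern TTS systems largely rely on neural networks to generate high-quality, natural-sounding speech. The typical pipeline involves two main stages: an acoustic model (such as Tacotron 2) that converts text into a mel-spectrogram, and a vocoder (such as WaveGlow) that converts the mel-spectrogram into an audio waveform.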

The code below demonstrates a two-stage TTS pipeline using pre-trained models from NVIDIA’s Torch Hub.

```python
import numpy as np
import torch
from scipy.io.wavfile import write

# Load pre-trained models and text-processing utilities from NVIDIA's Torch Hub
# (these checkpoints expect a CUDA-capable GPU)
tacotron2 = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_tacotron2')
waveglow = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_waveglow')
utils = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_tts_utils')

# Move models to the GPU and set them to evaluation mode
tacotron2 = tacotron2.to('cuda').eval()
waveglow = waveglow.remove_weightnorm(waveglow)
waveglow = waveglow.to('cuda').eval()

# Function to synthesize audio from text
def synthesize_text(text):
    # Convert text into a padded sequence of symbol IDs (plus lengths) on the GPU
    sequences, lengths = utils.prepare_input_sequence([text])

    with torch.no_grad():
        # Stage 1: Tacotron 2 predicts a mel-spectrogram from the symbol sequence
        mel, _, _ = tacotron2.infer(sequences, lengths)
        # Stage 2: WaveGlow converts the mel-spectrogram into an audio waveform
        audio = waveglow.infer(mel)

    return audio[0].cpu().numpy()

# Usage:
text = "Hello, this is a demonstration of text to audio generation using Tacotron 2 and WaveGlow."
audio = synthesize_text(text)

# Save the audio to a WAV file (these models use a 22050 Hz sample rate)
write("output.wav", 22050, audio.astype(np.float32))
print("Audio saved as output.wav")
```
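Tacotron 2's intermediate output is a mel-spectrogram: a sequence of frames, each summarizing the spectrum of a short audio window on the perceptually motivated mel frequency scale. The sketch below assumes a 22,050 Hz sample rate and a hop length of 256 samples per frame (the settings used in NVIDIA's Tacotron 2 recipe) to convert frame counts into durations, and shows the common HTK-style mel formula. Other mel variants exist (librosa, for example, defaults to the Slaney formula), so treat this as an illustration of the scale rather than the exact filterbank these models use; the function names are hypothetical.

```python
import math

# Assumed audio settings (NVIDIA's Tacotron 2 recipe uses these values)
SAMPLE_RATE = 22050  # audio samples per second
HOP_LENGTH = 256     # audio samples between consecutive mel-spectrogram frames

def frames_to_seconds(n_frames, sample_rate=SAMPLE_RATE, hop_length=HOP_LENGTH):
    """Approximate audio duration covered by n_frames mel-spectrogram frames."""
    return n_frames * hop_length / sample_rate

def hz_to_mel(f_hz):
    """HTK-style mel scale: roughly linear below ~1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

# Each frame covers about 11.6 ms of audio at these settings
print(f"{frames_to_seconds(1) * 1000:.1f} ms per frame")
# Equal 1000 Hz steps shrink on the mel scale as frequency rises
print(hz_to_mel(1000) - hz_to_mel(0), hz_to_mel(8000) - hz_to_mel(7000))
```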

Audio-to-text transcription (or automatic speech recognition, ASR) converts spoken language into written text. Over the years, approaches have evolved from traditional statistical models to modern deep learning architectures.

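Modern ASR systems use deep learning to learn the mapping from raw audio or spectral features directly to text, typically with CTC-based models (such as wav2vec 2.0) or encoder-decoder transformers (such as Whisper).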

The following Python code demonstrates how to perform audio transcription using the pre-trained facebook/wav2vec2-base-960h model from Hugging Face.

```python
import numpy as np
import soundfile as sf
import torch
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

# Load the pre-trained processor (feature extractor + tokenizer) and model
processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-base-960h")
model = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-base-960h")

# Load an audio file; the model expects mono audio sampled at 16 kHz
speech, sample_rate = sf.read("path_to_audio_file.wav")
if speech.ndim > 1:
    speech = speech.mean(axis=1)  # Down-mix stereo to mono
if sample_rate != 16000:
    # Resample with librosa if the file uses a different rate
    import librosa
    speech = librosa.resample(np.asarray(speech), orig_sr=sample_rate, target_sr=16000)

# Convert the raw waveform into normalized model inputs
input_values = processor(speech, sampling_rate=16000, return_tensors="pt").input_values

# Get logits from the model
with torch.no_grad():
    logits = model(input_values).logits

# Identify the predicted token IDs and decode them to text
predicted_ids = torch.argmax(logits, dim=-1)
transcription = processor.batch_decode(predicted_ids)[0]
print("Transcription:", transcription)
```
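The argmax-then-decode step works because wav2vec 2.0 is fine-tuned with Connectionist Temporal Classification (CTC): the model emits one label per audio frame, including a special blank label, and greedy decoding collapses repeated labels and then drops the blanks. The sketch below reimplements that rule on a toy frame sequence, assuming `_` as the blank and `|` as the word delimiter (facebook/wav2vec2-base-960h uses its padding token as the blank and `|` between words); the function name is hypothetical.

```python
from itertools import groupby

def ctc_greedy_decode(frame_labels, blank="_", word_delimiter="|"):
    """Greedy CTC decoding: merge consecutive repeats, remove blanks, map delimiters to spaces."""
    # 1. Collapse runs of identical labels ("hh" -> "h")
    collapsed = [label for label, _ in groupby(frame_labels)]
    # 2. Drop blanks; a blank between identical letters keeps them distinct ("l_l" -> "ll")
    kept = [label for label in collapsed if label != blank]
    # 3. Turn word delimiters into spaces
    return "".join(" " if label == word_delimiter else label for label in kept).strip()

frames = list("hh_e_ll_lo||_w_oo_r_ll_d")  # toy argmax labels, one per audio frame
print(ctc_greedy_decode(frames))
```

The blank between the two l's in "hello" is what stops them from being merged into a single letter.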

The code below demonstrates how to use OpenAI's Whisper model to transcribe an audio file with timestamps. The functions load the Whisper model, transcribe the audio, and format the timestamps into a human-readable format.

```python
import os
import sys

import whisper

def transcribe_audio(audio_path):
    """
    Transcribe an audio file using OpenAI's Whisper model with timestamps.

    Args:
        audio_path (str): Path to the audio file.

    Returns:
        list: List of dictionaries containing text segments and timestamps.
    """
    try:
        # Load the Whisper model (using the base model for faster processing)
        model = whisper.load_model("base")
        # Transcribe the audio file; each returned segment carries start/end times
        result = model.transcribe(audio_path)
        return result["segments"]
    except Exception as e:
        print(f"Error during transcription: {e}")
        return None

def format_timestamp(seconds):
    """Convert seconds to HH:MM:SS format"""
    hours = int(seconds // 3600)
    minutes = int((seconds % 3600) // 60)
    secs = int(seconds % 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"

def transcribe_audios(audio_path):
    """Transcribe audio_path, print timestamped segments, and save them to a .txt file."""
    transcribed_segments = transcribe_audio(audio_path)
    if transcribed_segments:
        print("\nTranscription:")
        # Format output with timestamps
        output_lines = []
        for segment in transcribed_segments:
            start_time = format_timestamp(segment['start'])
            end_time = format_timestamp(segment['end'])
            text = segment['text'].strip()
            formatted_line = f"[{start_time} --> {end_time}] {text}"
            print(formatted_line)
            output_lines.append(formatted_line)

        # Save to file with timestamps, using the same name as the audio file
        output_file = os.path.splitext(audio_path)[0] + ".txt"
        with open(output_file, "w", encoding="utf-8") as f:
            f.write("\n".join(output_lines))
        print(f"\nTranscription saved to {output_file}")
    else:
        print("No transcription segments found.")

if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("Usage: python script.py <audio_path>")
    else:
        transcribe_audios(sys.argv[1])
```

This complete code uses OpenAI's Whisper model to transcribe an audio file with timestamps and save the output to a text file. Run the script from the command line, passing the audio file path as an argument.

Text-to-video generation is an emerging field that extends the principles of text-to-image synthesis into the temporal domain, enabling the creation of dynamic video content from textual descriptions. This task involves generating a sequence of coherent frames that not only depict a scene but also exhibit smooth transitions over time.

This code demonstrates how to generate a video from a text prompt. Stable Video Diffusion generates video from an input image rather than from text, so this example instead uses the ModelScope text-to-video model (damo-vilab/text-to-video-ms-1.7b) through the diffusers TextToVideoSDPipeline. The code includes a helper function to load the model pipeline and then uses it to generate video frames that are written to an MP4 file.

```python
import torch
from diffusers import TextToVideoSDPipeline
from diffusers.utils import export_to_video

def load_text_to_video_model(model_name):
    """
    Loads a text-to-video model from the specified model name.

    Args:
        model_name (str): Identifier for the pre-trained model.

    Returns:
        TextToVideoSDPipeline: The loaded text-to-video model pipeline.
    """
    pipe = TextToVideoSDPipeline.from_pretrained(
        model_name,
        torch_dtype=torch.float16
    )
    pipe = pipe.to("cuda")  # float16 weights require a GPU
    return pipe

def generate_video_from_text(prompt, num_frames=16, output_file="output.mp4"):
    """
    Generate a video from a text prompt using the ModelScope text-to-video model.

    Args:
        prompt (str): The textual description for video generation.
        num_frames (int): Number of frames to generate.
        output_file (str): Path to save the output video.
    """
    # Load the text-to-video pipeline using the helper function
    model_name = "damo-vilab/text-to-video-ms-1.7b"
    pipe = load_text_to_video_model(model_name)

    # Generate video frames from the text prompt
    result = pipe(
        prompt,
        num_inference_steps=50,
        guidance_scale=7.5,
        num_frames=num_frames
    )

    # Recent diffusers releases return a batch of videos; take the first one
    frames = result.frames[0]

    # Write the frames to an MP4 file
    fps = 15  # Frames per second
    export_to_video(frames, output_file, fps=fps)
    print(f"Video saved to {output_file}")

if __name__ == "__main__":
    prompt = "Waves crashing against a rocky coastline during a storm, with lightning flashing in the dark clouds above."
    generate_video_from_text(prompt)
```

In this code, the load_text_to_video_model function loads the pipeline for the model identifier "damo-vilab/text-to-video-ms-1.7b" from Hugging Face. The generate_video_from_text function then generates video frames from the text prompt and writes them to an MP4 file with the export_to_video helper.
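The `guidance_scale=7.5` argument in the pipeline call controls classifier-free guidance, which the Stable Diffusion-style pipelines in diffusers use: at each denoising step the model predicts the noise twice, once conditioned on the prompt and once on an empty prompt, and the two predictions are extrapolated. The sketch below shows that combination on plain Python lists standing in for noise tensors; the function name and values are hypothetical.

```python
def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale):
    """Combine unconditional and prompt-conditioned noise predictions.

    guidance_scale = 1 returns the conditional prediction; larger values push
    the sample further in the direction the prompt suggests.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]

uncond = [0.10, -0.20, 0.05]  # toy values standing in for a predicted noise tensor
cond = [0.30, -0.10, 0.05]
print(classifier_free_guidance(uncond, cond, 7.5))
```

With a scale of 7.5, the difference between the two predictions is amplified 7.5 times, trading some sample diversity for closer adherence to the prompt.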

Generative AI is revolutionizing visual content creation by enabling a wide array of advanced editing and enhancement tools. Applications such as Creative Upscale, Enhance, and Upscale allow users to enlarge and refine images with impressive detail preservation, while Search & Replace, Erase, and Inpaint facilitate precise modifications and seamless restoration of image components. Tools like Outpaint and Zoom Out extend existing images beyond their original boundaries, creating immersive, expanded scenes. Additionally, features such as Remove Background and Before/After comparisons simplify complex editing tasks by isolating and highlighting specific elements.

Beyond static image manipulation, generative AI extends its capabilities to dynamic and multi-dimensional media. With Sketch Control and Sketch to Image, rough ideas are transformed into polished visuals, while Structure Control ensures consistency in composition. For video applications, functionalities like Image to Video and Video Search & Replace empower users to generate and edit dynamic content, and Image to 3D techniques convert 2D visuals into detailed three-dimensional models. Together, these innovations merge artistic vision with automated precision, streamlining creative workflows across various visual mediums.

Text-Based Generations

Text-to-3D: Generate 3D models from text prompts.

Text-to-Animation: Create animations from text descriptions.

Text-to-Code: Convert natural language prompts into code.

Text-to-Music: Generate music from text prompts.

Text-to-Game: Generate game assets, mechanics, or full games.

Text-to-Graph: Convert text into structured knowledge graphs.

Text-to-Emoji/Meme: Generate emojis or memes from text prompts.

Image-Based Generations

Image-to-Text: Generate captions or descriptions for images.

Image-to-3D: Convert 2D images into 3D models.

Image-to-Music: Create music inspired by an image’s theme.

Image-to-Sketch/Painting: Transform images into artistic styles.

Audio-Based Generations

Audio-to-Image: Generate images based on sounds or music.

Audio-to-Video: Create visual animations from audio.

Audio-to-Audio: Convert one style of audio into another.

Audio-to-Music: Generate music from spoken descriptions or humming.

Video-Based Generations

Video-to-Text: Generate summaries or captions for videos.

Video-to-Video: Transform video styles (e.g., real to anime).

Video-to-3D: Extract 3D information from video frames.

Other Unique Modalities

Brainwaves-to-Text/Audio/Image: Interpret brain signals for content generation.

Motion-to-Video: Create motion animations from skeletal movement data.

Haptic-to-Text: Generate text descriptions based on tactile input.

"We could only be a few years, maybe a decade away."

"We are at 'peak data'; the way AI is built is about to change."