Human Senses, Cognition, and the Promise—and Peril—of AI

Human senses are our primary means of interacting with the world. The Buddhist (Pali) tradition describes six sensory faculties: eye/vision (cakkh-indriya), ear/hearing (sot-indriya), touch/body/sensibility (kāy-indriya), tongue/taste (jivh-indriya), nose/smell (ghān-indriya), and thought/mind (man-indriya). These faculties not only help us gather data about our surroundings but also shape our experiences, emotions, and understanding of reality. In particular, the thought/mind faculty distinguishes human cognition by processing abstract concepts, memories, and emotions to enable reasoning, creativity, introspection, and ethical decision-making.

While our physical senses provide raw inputs, the thought/mind faculty integrates these inputs with our personal experiences and knowledge to create a deep, subjective understanding of the world. This integrated cognitive process underlies our ability to adapt, empathize, and make moral judgments—capabilities that remain uniquely human.

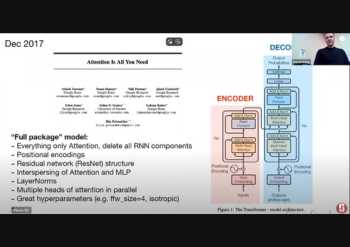

Meanwhile, technological advances have enabled machines to mimic many aspects of our sensory systems. Cameras, microphones, tactile sensors, and chemical sensors serve as artificial counterparts to vision, hearing, touch, and both taste and smell, while gyroscopes supply a sense of balance and orientation. Robotics and speech synthesis further replicate motor skills and language functions. Moreover, modern AI increasingly combines multiple sensory inputs, known as multimodal capabilities, to analyze images, interpret speech, and generate context-aware responses in fields such as healthcare, accessibility, and autonomous driving.

The Critical Distinction: Senses vs. Cognition

Machines may be excellent at processing data quickly and accurately, but they lack the inherent consciousness and subjective experience that the human thought/mind faculty provides. While AI systems can detect patterns and execute complex tasks, they do so without genuine introspection or ethical judgment. For example, an autonomous vehicle's sensors and algorithms allow it to react to obstacles, yet it does not "feel" fear or consider the moral weight of its decisions. This fundamental gap explains why AI—even as it augments our decision-making—remains limited in replicating the holistic, value-laden experiences that arise from human cognition.

AI: A Tool of Great Promise and Serious Peril

The promise of AI lies in its potential to enhance human capabilities. AI can improve medical diagnostics through advanced imaging, optimize logistics with data-driven insights, and personalize education by integrating diverse sensory data. Multimodal systems in particular can process vast amounts of information and deliver rapid, efficient solutions that complement human effort.

However, as AI systems grow more sophisticated, concerns arise about their capacity to surpass human intelligence and operate beyond our control. Some experts warn that a superintelligent AI might make decisions that conflict with human ethics or even endanger human existence. There are nearer-term risks as well: the same AI that helps optimize manufacturing or streamline services might also lead to job displacement, privacy invasions, and ethical dilemmas, especially when its decision-making lacks the nuance and moral intuition derived from our thought/mind faculty.

The potential risks underscore the need for robust ethical frameworks and regulatory measures. Proactive governance—including transparency, accountability, and ongoing collaboration among governments, industry leaders, and the public—is essential to ensure that AI development aligns with human values. Initiatives that address data privacy, algorithmic bias, and explainability are critical to mitigating potential harms while harnessing AI's benefits.

In Conclusion

AI is a powerful tool that can augment human capabilities and drive innovation. Yet it lacks the consciousness and ethical intuition that arise from our uniquely human integration of sensory experience and reflective thought, and this remains a critical limitation. By clarifying the distinction between mere sensory replication and the rich, embodied nature of human cognition, we can better appreciate both the promise and the perils of AI. With cautious, ethically informed development and governance, we have the opportunity to harness AI's potential as a partner in progress rather than a threat to human existence.